Abstract

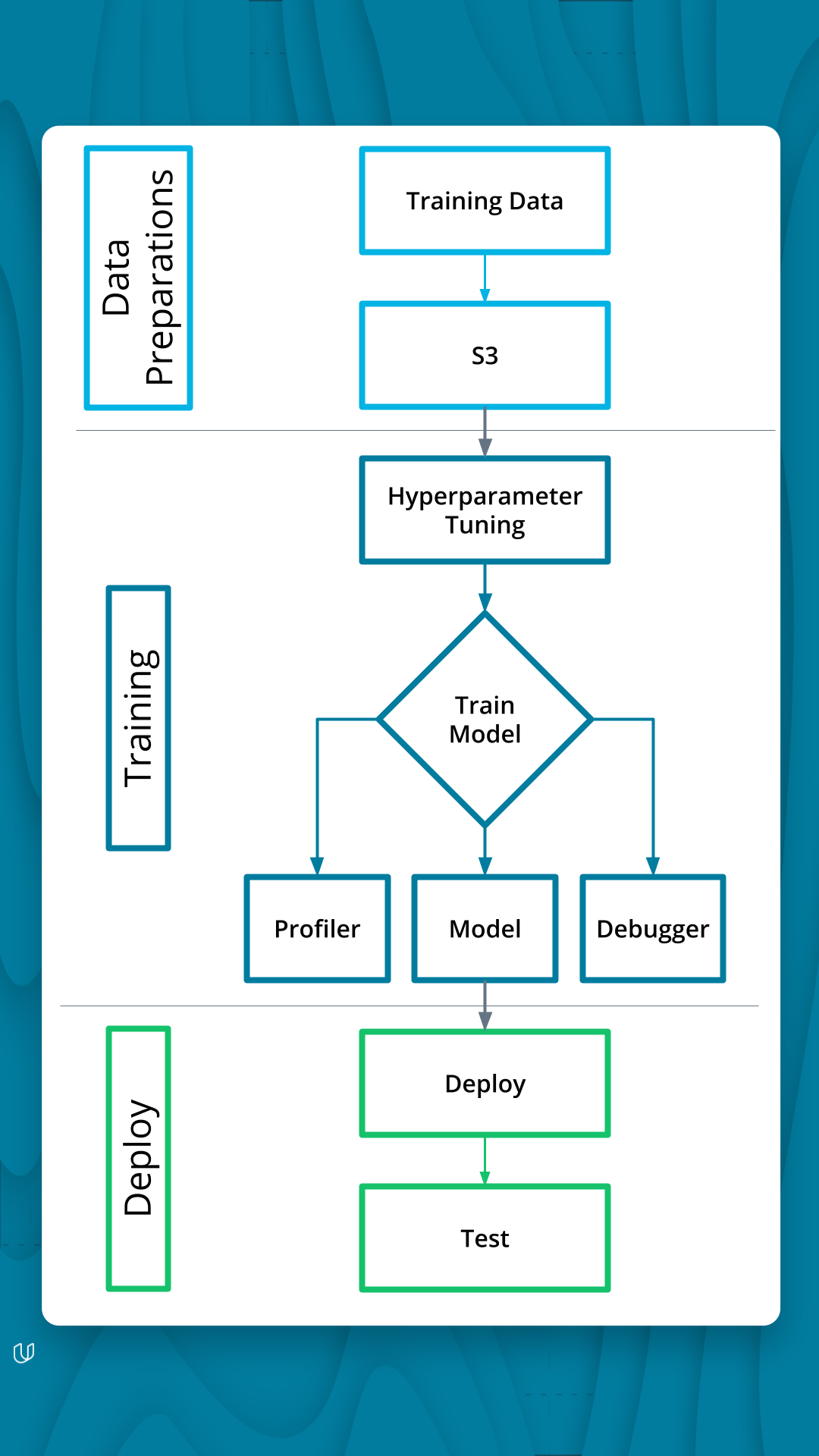

In this project, we use AWS SageMaker to fine-tune a pretrained model for image classification. We use SageMaker hyperparameter tuning, SageMaker Debugger, and SageMaker Profiler, along with other good ML engineering practices, to complete the project.

The task is dog breed classification.

Dataset

The dataset contains images of 133 dog breeds (for example Golden Retriever and Akita), divided into training, validation, and test sets.

The data is stored in S3.

    # Command to download and unzip the data
    !wget https://s3-us-west-1.amazonaws.com/udacity-aind/dog-project/dogImages.zip
    !unzip dogImages.zip
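After unzipping, a quick sanity check is to count the breed folders and images in each split before uploading anything. The sketch below assumes the archive extracts to dogImages/<split>/<breed folder>/<image> (for example dogImages/train/001.Affenpinscher/...); that layout and the helper name count_images are assumptions, not part of the original notebook.

```python
from pathlib import Path

# Assumed layout: <root>/<split>/<breed folder>/<image file>
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def count_images(root):
    """Hypothetical helper: return {split: (breed_folder_count, image_count)}."""
    summary = {}
    for split_dir in sorted(Path(root).iterdir()):
        if not split_dir.is_dir():
            continue  # skip stray files next to the split folders
        breed_dirs = [d for d in split_dir.iterdir() if d.is_dir()]
        n_images = sum(
            1
            for d in breed_dirs
            for f in d.iterdir()
            if f.suffix.lower() in IMAGE_SUFFIXES
        )
        summary[split_dir.name] = (len(breed_dirs), n_images)
    return summary
```

In the notebook, count_images("dogImages") should list train, valid, and test, each expected to contain 133 breed folders.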

Session setup

    import sagemaker

    session = sagemaker.Session()
    bucket = session.default_bucket()
    role = sagemaker.get_execution_role()
    region = session.boto_region_name

Store data

    import os

    # Expose the bucket name to the shell so the sync command can use it
    os.environ["DEFAULT_S3_BUCKET"] = bucket
    !aws s3 sync ./dogImages s3://${DEFAULT_S3_BUCKET}/dogImages

Hyperparameter Tuning

    import sagemaker
    from sagemaker.tuner import (
        IntegerParameter,
        CategoricalParameter,
        ContinuousParameter,
        HyperparameterTuner,
    )

    # Search space: learning rate, batch size, and number of epochs
    hyperparameter_ranges = {
        "lr": ContinuousParameter(0.001, 0.1),
        "batch-size": CategoricalParameter([32, 64, 128, 256, 512]),
        "epochs": IntegerParameter(2, 4),
    }

    objective_metric_name = "accuracy"
    objective_type = "Maximize"
    # Raw string avoids invalid-escape warnings; captures the integer inside "(NN%)"
    metric_definitions = [{"Name": "accuracy", "Regex": r"\((\d+)%\)"}]
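SageMaker applies the Regex in metric_definitions to the training job's log output and uses the first capture group as the metric value. A quick local check of the pattern, assuming hpo.py prints a line ending in an integer percentage in parentheses (the sample log line is hypothetical, in the style of the PyTorch MNIST example):

```python
import re

# Same pattern as in metric_definitions
ACCURACY_REGEX = r"\((\d+)%\)"

# Hypothetical log line printed by the training script
sample_log = "Test set: Average loss: 0.0241, Accuracy: 702/835 (84%)"

match = re.search(ACCURACY_REGEX, sample_log)
accuracy = int(match.group(1)) if match else None
print(accuracy)  # 84
```

Because \d+ matches digits only, the tuner sees whole percentages; a line such as "(84.3%)" would not match at all, so the script has to print an integer percentage.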

Estimator for Hyperparameter Tuning

The hyperparameters being tuned are lr (the learning rate), epochs, and batch-size.
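SageMaker passes each hyperparameter to the entry point as a command-line argument (for example --lr 0.0025) and exposes each input channel as an SM_CHANNEL_<NAME> environment variable. The original hpo.py is not shown, so the parser below is only a minimal sketch of how such a script might read lr, epochs, and batch-size; the defaults and the local fallback paths are assumptions.

```python
import argparse
import os


def parse_args(argv=None):
    """Hypothetical argument parser for a training script like hpo.py."""
    parser = argparse.ArgumentParser()
    # Tuned hyperparameters arrive as --<name> <value> on the command line
    parser.add_argument("--lr", type=float, default=0.01)
    parser.add_argument("--epochs", type=int, default=2)
    # argparse stores "--batch-size" as args.batch_size
    parser.add_argument("--batch-size", type=int, default=64)
    # Channel directories; the fallbacks are for running locally (assumption)
    parser.add_argument("--train", default=os.environ.get("SM_CHANNEL_TRAIN", "dogImages/train"))
    parser.add_argument("--test", default=os.environ.get("SM_CHANNEL_TEST", "dogImages/valid"))
    return parser.parse_args(argv)
```

For example, parse_args(["--lr", "0.0025", "--batch-size", "256", "--epochs", "4"]) gives args.batch_size == 256, and inside the container args.train points at the "train" channel passed to fit().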

    from sagemaker.pytorch import PyTorch

    # Estimator that runs hpo.py for each tuning job
    estimator = PyTorch(
        entry_point="hpo.py",
        role=role,
        py_version="py36",
        framework_version="1.8",
        instance_count=1,
        instance_type="ml.m5.xlarge",
    )

    # Run at most 4 training jobs, all 4 in parallel
    tuner = HyperparameterTuner(
        estimator,
        objective_metric_name,
        hyperparameter_ranges,
        metric_definitions,
        max_jobs=4,
        max_parallel_jobs=4,
        objective_type=objective_type,
    )

Fit Hyperparameter Tuner

    train_images_path = f"s3://{bucket}/dogImages/train"
    print(train_images_path)
    validation_images_path = f"s3://{bucket}/dogImages/valid"
    print(validation_images_path)

    # The validation split is passed in the "test" channel
    tuner.fit({"train": train_images_path, "test": validation_images_path})

Output:

    s3://sagemaker-us-east-1-820131057864/dogImages/train
    s3://sagemaker-us-east-1-820131057864/dogImages/valid
    .........................!

Get the best estimator and its hyperparameters

    best_estimator = tuner.best_estimator()

    # Get the hyperparameters of the best training job
    best_estimator.hyperparameters()

Output:

    {'_tuning_objective_metric': '"accuracy"',
     'batch-size': '"256"',
     'epochs': '4',
     'lr': '0.002564503867458704',
     'sagemaker_container_log_level': '20',
     'sagemaker_estimator_class_name': '"PyTorch"',
     'sagemaker_estimator_module': '"sagemaker.pytorch.estimator"',
     'sagemaker_job_name': '"pytorch-training-2023-02-14-07-57-50-222"',
     'sagemaker_program': '"hpo.py"',
     'sagemaker_region': '"us-east-1"',
     'sagemaker_submit_directory': '"s3://sagemaker-us-east-1-820131057864/pytorch-training-2023-02-14-07-57-50-222/source/sourcedir.tar.gz"'}
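The values returned by best_estimator.hyperparameters() are JSON-encoded strings (note '"256"' versus '4'), mixed with SageMaker's own bookkeeping keys. A small helper, whose name is illustrative, can turn them into plain Python values for reuse in the final training job instead of copying them by hand:

```python
import json


def tuned_hyperparameters(raw):
    """Drop SageMaker bookkeeping keys and JSON-decode the remaining values."""
    return {
        key: json.loads(value)
        for key, value in raw.items()
        if not key.startswith(("_", "sagemaker_"))
    }


# A subset of the tuning output shown above
raw = {
    "_tuning_objective_metric": '"accuracy"',
    "batch-size": '"256"',
    "epochs": "4",
    "lr": "0.002564503867458704",
    "sagemaker_program": '"hpo.py"',
}
best = tuned_hyperparameters(raw)
print(best)
```

In the notebook the call would be tuned_hyperparameters(best_estimator.hyperparameters()). The categorical batch size stays a string ("256"), while epochs and lr decode to numbers.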

Model Profiling and Debugging

Set up debugging and profiling rules and hooks

    from sagemaker.debugger import Rule, ProfilerRule, rule_configs

    rules = [
        ProfilerRule.sagemaker(rule_configs.LowGPUUtilization()),
        ProfilerRule.sagemaker(rule_configs.ProfilerReport()),
        Rule.sagemaker(rule_configs.vanishing_gradient()),
        Rule.sagemaker(rule_configs.overfit()),
    ]

    from sagemaker.debugger import DebuggerHookConfig, ProfilerConfig, FrameworkProfile

    # Sample system metrics every 500 ms and profile the framework for 10 steps
    profiler_config = ProfilerConfig(
        system_monitor_interval_millis=500,
        framework_profile_params=FrameworkProfile(num_steps=10),
    )

    # Save tensors every 10 training steps and every evaluation step
    debugger_config = DebuggerHookConfig(
        hook_parameters={"train.save_interval": "10", "eval.save_interval": "1"}
    )
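The hook_parameters set how often Debugger saves tensors in each mode. Assuming the hook saves a step whenever its step number within that mode is a multiple of save_interval (starting from step 0), the resulting schedule can be sketched as follows; saved_steps is an illustrative helper, not part of smdebug.

```python
def saved_steps(save_interval, total_steps, start_step=0):
    """Steps (within one mode) saved by an interval-based schedule (assumed semantics)."""
    return [
        step
        for step in range(start_step, total_steps)
        if step % save_interval == 0
    ]


# "train.save_interval": "10" over 35 training steps
print(saved_steps(10, 35))  # [0, 10, 20, 30]
# "eval.save_interval": "1" over 5 evaluation steps
print(saved_steps(1, 5))  # [0, 1, 2, 3, 4]
```

Saving only every tenth training step keeps the debug output small, while saving every evaluation step keeps the full validation signal that the overfit rule compares against.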

    # Best hyperparameters found by the tuning job
    hyperparameters = {
        "batch-size": "256",
        "epochs": 4,
        "lr": "0.002564503867458704",
    }

Create an estimator

    from sagemaker.pytorch import PyTorch

    estimator = PyTorch(
        role=sagemaker.get_execution_role(),
        instance_count=1,
        instance_type="ml.m5.2xlarge",
        source_dir=".",
        entry_point="train_model.py",
        framework_version="1.8",
        py_version="py36",
        hyperparameters=hyperparameters,
        profiler_config=profiler_config,
        debugger_hook_config=debugger_config,
        rules=rules,
    )

    estimator.fit({"train": train_images_path, "test": validation_images_path}, wait=True)

Output:

    2023-02-14 09:18:46 Completed - Training job completed
    VanishingGradient: NoIssuesFound
    Overfit: NoIssuesFound
    LowGPUUtilization: NoIssuesFound
    ProfilerReport: IssuesFound
    Training seconds: 1251
    Billable seconds: 1251

Debugger Report

    from smdebug.trials import create_trial
    from smdebug.core.modes import ModeKeys

    # Load the Debugger artifacts saved by the latest training job
    trial = create_trial(estimator.latest_job_debugger_artifacts_path())

Output:

    [2023-02-14 11:15:04.165 datascience-1-0-ml-g4dn-xlarge-94fad2f4401e538ca1255dfa1e84:125 INFO utils.py:27] RULE_JOB_STOP_SIGNAL_FILENAME: None
    [2023-02-14 11:15:04.176 datascience-1-0-ml-g4dn-xlarge-94fad2f4401e538ca1255dfa1e84:125 INFO s3_trial.py:42] Loading trial debug-output at path s3://sagemaker-us-east-1-820131057864/pytorch-training-2023-02-14-08-54-36-852/debug-output
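Once the trial is loaded, a loss curve can be pulled out of it per mode. The helper below relies only on the smdebug calls trial.tensor(name), tensor.steps(mode=...) and tensor.value(step, mode=...); its name is illustrative, and the tensor name used in the usage comment (CrossEntropyLoss_output_0) is an assumption that depends on how train_model.py registers its loss, so check trial.tensor_names() first.

```python
def loss_series(trial, tensor_name, mode):
    """Return (steps, values) for one saved tensor in one mode (e.g. ModeKeys.TRAIN)."""
    tensor = trial.tensor(tensor_name)
    steps = tensor.steps(mode=mode)
    # value() returns an array; a scalar loss converts cleanly with float()
    values = [float(tensor.value(step, mode=mode)) for step in steps]
    return steps, values


# Usage in the notebook (tensor name is hypothetical):
# train_steps, train_loss = loss_series(trial, "CrossEntropyLoss_output_0", ModeKeys.TRAIN)
# eval_steps, eval_loss = loss_series(trial, "CrossEntropyLoss_output_0", ModeKeys.EVAL)
```

Plotting the TRAIN and EVAL series on shared axes gives a visual check of the Overfit rule result.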

Model Deployment

    from sagemaker.pytorch import PyTorchModel

    # Serve the trained model artifacts with the inference script
    pytorch_model = PyTorchModel(
        model_data=estimator.model_data,
        role=role,
        entry_point="deply.py",
        py_version="py36",
        framework_version="1.8",
    )

    predictor = pytorch_model.deploy(initial_instance_count=1, instance_type="ml.m5.xlarge")
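A prediction from the endpoint still has to be mapped back to a breed name. Assuming the endpoint returns one score per class and that train_model.py loads the data with torchvision's ImageFolder (which assigns class indices in sorted folder-name order), with folders named like 001.Affenpinscher, the mapping can be sketched as follows; both helpers are illustrative.

```python
def breed_names(folder_names):
    """Map class index -> readable breed name, following ImageFolder's sorted order."""
    # "001.Affenpinscher" -> "Affenpinscher", "002.Afghan_hound" -> "Afghan hound"
    return [name.split(".", 1)[1].replace("_", " ") for name in sorted(folder_names)]


def top_prediction(scores, names):
    """Return the breed with the highest score from one row of endpoint output."""
    best_index = max(range(len(scores)), key=scores.__getitem__)
    return names[best_index]


names = breed_names(["002.Afghan_hound", "001.Affenpinscher", "003.Airedale_terrier"])
print(top_prediction([0.1, 2.3, -0.4], names))  # Afghan hound
```

In the notebook, the folder names would come from os.listdir("dogImages/train"), so the index order matches the one used during training.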